name: inverse

layout: true

class: center, middle, inverse

---

# Such blocking very concurrency, wow

???

thinking of calling it "such writers block, very concurrency"

---

class: center

layout: false

# Follow along

http://shairosenfeld.com/such_blocking_very_concurrency/

# http://shairosenfeld.com/sbvc/

Slides are going to be dense...

---

name: whoami

layout: false

.left-column[

# Who Am I

]

.right-column[

- Work at [Engine Yard](http://engineyard.com)

- I code on [github / shaiguitar](http://github.com/shaiguitar)

- Tweet at me [@shaiguitar](http://twitter.com/shaiguitar)

- Other boring info on my [personal website](http://shairosenfeld.com)

]

---

template: inverse

.headernote[

## Such blocking very concurrency, wow

]

Cats.

---

template: inverse

.headernote[

## Such blocking very concurrency, wow

]

Cats.

Tech talks must have cats in them.

---

class: center

.left-column[

## Cats

]

---

class: center

.left-column[

## Cats

]

---

class: center

.left-column[

## Cats

]

---

template: inverse

.headernote[

## Such blocking very concurrency, wow

]

How do you scale?

???

since this is a talk about scaling, I was thinking I should give a talk about concurrency! that's how you scale, right?

---

class: center, middle

---

class: center, middle

---

template: inverse

.headernote[

## Such blocking very concurrency, wow

]

How do you scale?

Do more things at once!

---

.left-column[

## Agenda

]

.right-column[

.center-height[

- Talk about all the ways you can do more things at once!

]

]

---

.left-column[

## Agenda

]

.right-column[

All of the ways!

]

---

.left-column[

## Agenda

]

.right-column[

- Concurrency and Parallelism.

]

---

.left-column[

## Agenda

]

.right-column[

All of the ways!

]

---

.left-column[

## Agenda

]

.right-column[

- Concurrency and Parallelism.

Models...

- Actors.

- Shared memory (mutexes, STM).

- Communicating sequential processes aka CSP.

- Futures/Promises/Dataflow variables.

- Coroutines/Continuations.

- Evented IO/multiplexing.

- ......

]

---

.left-column[

## Agenda

]

.right-column[

All of the ways!

]

---

.left-column[

## Agenda

]

.right-column[

- Petri nets

- Process Calculi

- Ambient calculus

- Calculus of Communicating Systems

- π-calculus

- Join-calculus

- ......

]

---

.left-column[

## Agenda

]

.right-column[

]

---

But I wanna...

---

name:agenda

.left-column[

## Agenda

So...for realsies!

]

.right-column[

- A concurrency patterns Tour

- General concepts

- Models

- Pros/Cons

]

---

name:agenda

.left-column[

## Agenda

So...for realsies!

]

.right-column[

- A concurrency patterns

Tour

- General concepts

- Models

- Pros/Cons

]

---

template: inverse

# General Concepts

## common ingredients

---

template: inverse

# Models

## some famous ones

---

template: inverse

# Advantages/Disadvantages

## pros and cons

---

template: inverse

# Let you sit with it

## people spend lifetimes on this stuff

---

template: inverse

# Let you sit with it

## people spend lifetimes on this stuff

## "exercise for the reader"

---

name: tour_general

template: inverse

.headernote[

## General Concepts / Terminology

]

- Concurrency/Parallelism

- OS mechanisms

- Scheduling

- Communication

---

template: inverse

.headernote[

## General Concepts / Terminology

]

## Concurrency vs Parallelism

What is the difference?

---

name:concurrency_vs_parallel_conclusion

.left-column[

## Concurrency tour

- General concepts / Terminology

- Concurrency vs Parallelism

]

.right-column[

http://www.haskell.org/haskellwiki/Parallelism_vs._Concurrency

- Warning: Not all programmers agree on the meaning of the terms 'parallelism' and 'concurrency'. They may define them in different ways or do not distinguish them at all.

]

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Concurrency vs Parallelism

]

.right-column[

]

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Concurrency vs Parallelism

]

.right-column[

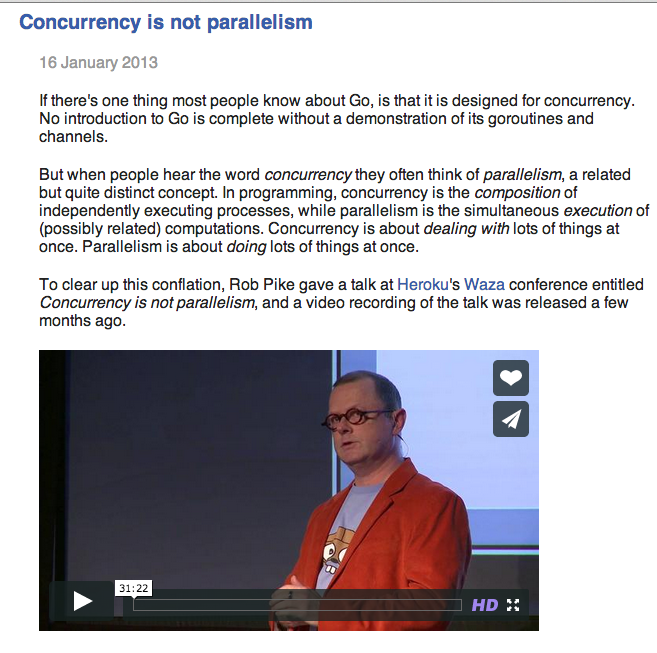

http://blog.golang.org/concurrency-is-not-parallelism

- Concurrency is about

dealing with lots of things at once.

- Parallelism is about doing lots of things

at once.

]

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Concurrency vs Parallelism

]

.right-column[

Monty Python

]

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Concurrency vs Parallelism

]

.right-column[

Monty Python

]

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Concurrency vs Parallelism

]

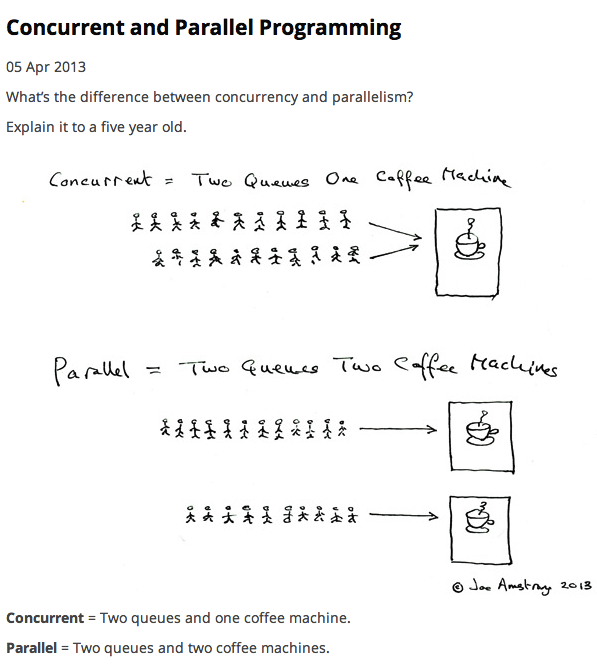

.right-column[

Joe Armstrong, Erlang

http://joearms.github.io/2013/04/05/concurrent-and-parallel-programming.html

http://www.youtube.com/watch?v=uKfKtXYLG78

]

???

guy in the middle means serious biz.

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Concurrency vs Parallelism

]

.right-column[

]

---

name:concurrency_vs_parallel_conclusion

.left-column[

## Concurrency tour

- General concepts / Terminology

- Concurrency vs Parallelism

]

.right-column[

http://stackoverflow.com/questions/1050222/concurrency-vs-parallelism-what-is-the-difference

- Parallelism: A condition that arises when at least two threads are executing

simultaneously.

- Concurrency: A more

generalized form of parallelism that can include time-slicing as a form of virtual parallelism.

]

???

A simplified conclusion:

- Concurrency ⊇ Parallelism *

* not actually true ([Flynn's taxonomy](http://en.wikipedia.org/wiki/Flynn%27s_taxonomy))

my unprofessional simplified conclusion is kinda incorrect ( although: http://programmers.stackexchange.com/questions/155109/parallelism-implies-concurrency-but-not-the-other-way-round-right ). But it's simplified. it's not really a subset. See:

http://en.wikipedia.org/wiki/Flynn%27s_taxonomy

---

template: inverse

.headernote[

## General Concepts / Terminology

]

## OS mechanisms

How are things executed?

???

Process is NOT a Concurrent Process in the generic term.

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- OS mechanisms

]

.right-column[

- Threads and processes

]

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- OS mechanisms

- Processes

]

.right-column[

- OS Processes

### ps aux | grep a_process

]

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- OS mechanisms

- Threads

]

.right-column[

- Native Threads

http://www.yolinux.com/TUTORIALS/LinuxTutorialPosixThreads.html#BASICS

All threads within a process share the same address space.

]

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- OS mechanisms

- Threads

]

.right-column[

- "Green" Threads

http://en.wikipedia.org/wiki/Green_threads

Scheduled by a virtual machine (VM) without relying on any native OS capabilities.

]

???

maybe expand a bit about fibers, talk more about it later: http://en.wikipedia.org/wiki/Fiber_(computer_science)#Operating_system_support

not strictly related to OS, more like a thread that's cooperative

template: inverse

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- OS mechanisms

- Processes

]

.right-column[

- VM Processes (eg, erlang processes)

Similar to green threads but don't share memory.

]

???

It's inception because it's a process within a process.

expand on goroutines, erlang processes, the generic term of a "process" in regards to concurrency

inception because: process within a process...so that's like a green thread, but it can be different, because no shared state like threads

http://stackoverflow.com/questions/2708033/technically-why-are-processes-in-erlang-more-efficient-than-os-threads

"Erlang processes correspond (approximately) to green threads in other languages; there's no OS-enforced separation between the processes. (There may well be language-enforced separation, but that's a lesser protection despite Erlang doing a better job than most.) Because they're so much lighter-weight, they can be used far more extensively.

OS threads on the other hand are able to be simply scheduled on different CPU cores, and are (mostly) able to support independent CPU-bound processing. OS processes are like OS threads, but with much stronger OS-enforced separation. The price of these capabilities is that OS threads and (even more so) processes are more expensive."

---

template:inverse

.headernote[

## General Concepts / Terminology

]

## Scheduling

How do you switch around between the executing things?

---

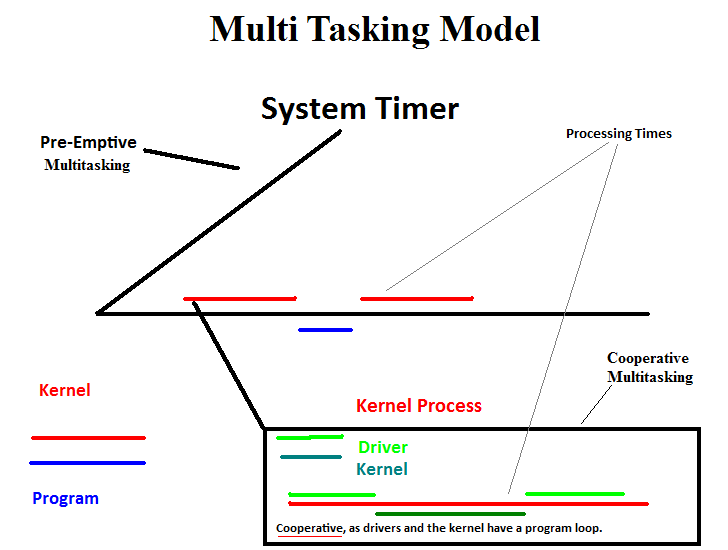

.left-column[

## Concurrency tour

- General concepts / Terminology

- Scheduling

]

.right-column[

- Preemtive

- Cooperative

]

???

exapand on differences of blocking semantics within those?

@CT Scheduling:

coperative multitasking vs premtive scheduling

cooperative multitasking

"Because a cooperatively multitasked system relies on each process regularly giving up time to other processes on the system,

one poorly designed program can consume all of the CPU time for itself or cause the whole system to hang.

in ruby - fibers, continuations, etc.

premtive scheduling

"Preemptive multitasking allows the computer system to more reliably guarantee each process a regular "slice" of operating time."

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Scheduling

- Preemtive

]

.right-column[

- Preemtive

]

???

Interrupts

It's like Bush, attacks can happen at any time, and for no good reason

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Scheduling

- Preemtive

]

.right-column[

Anything can happen at any time...

http://en.wikipedia.org/wiki/Preemption_%28computing%29

"In computing, preemption is the act of temporarily interrupting a task being carried out by a computer system, without requiring its cooperation."

]

???

Interrupts http://en.wikipedia.org/wiki/Interrupt are preemptive.

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Scheduling

- Cooperative

]

.right-column[

- Cooperative

]

???

Essentially, a fiber/coroutine

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Scheduling

- Cooperative

]

.right-column[

http://en.wikipedia.org/wiki/Scheduling_%28computing%29

"It

relied on the program to end or tell the OS that it didn't need the processor so that it could move on to another process. This is usually called cooperative multitasking."

http://en.wikipedia.org/wiki/Computer_multitasking#Cooperative_multitasking

"Each user wanted to see their program running as if it were the only program in the computer. The use of time sharing made this possible, with the qualification that the computer might not seem as fast to any one user as it really would be if it were running only that user's program."

]

---

template: inverse

.headernote[

## General Concepts / Terminology

]

## Communication

How do the executing things not trip over each other?

---

.left-column[

## Concurrency tour

- General Concepts

- Atomicity

]

.right-column[

Problems happen (in some environments) because operations are not atomic:

```ruby

foo =+ 1

actually translates to

1) read foo into TMP

2) increment TMP (whatever calculation is needed)

3) write back foo to curr TMP value

```

http://en.wikipedia.org/wiki/Atomicity_%28programming%29

```ruby

- Thread1:

- read variable / eq 0

- increment variable / eq 1

- Thread2:

- read variable / eq 0

- Thread1:

- write variable / eq 1

- Thread2:

- increment variable / eq 1

- write variable / eq 1

```

]

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Communicate

]

.right-column[

]

---

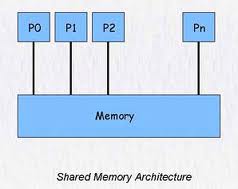

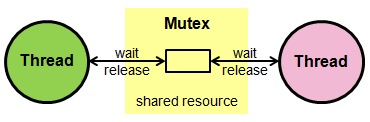

.left-column[

## Concurrency tour

- General concepts / Terminology

- Communicate

- Shared Memory

]

.right-column[

- Shared Memory

]

???

Atomicity is commonly enforced by mutual exclusion, whether at the hardware level building on a cache coherency protocol, or the software level using semaphores or locks.

- http://en.wikipedia.org/wiki/Atomic_operation

(MyMutex?, hardware support)

@CT Communication Shared Mem:

synchronization over shared memory:

http://en.wikipedia.org/wiki/Dekker%27s_algorithm

http://en.wikipedia.org/wiki/Peterson's_algorithm

http://en.wikipedia.org/wiki/Test-and-set

http://en.wikipedia.org/wiki/Atomic_operation

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Communicate

- Shared Memory

]

.right-column[

http://en.wikipedia.org/wiki/Shared_memory

"In computing, shared memory is memory that may be simultaneously accessed by multiple programs with an intent to provide communication among them or avoid redundant copies. Shared memory is an

efficient means of passing data between programs."

]

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Communicate

- Channels / Message Passing

]

.right-column[

- Message Passing/Channels

]

???

@CT Communication Channels:

!! http://en.wikipedia.org/wiki/Back_pressure !!

It's like what you use ever day!

unix pipes...

the internet! sockets...

Hidden from programmer

Message passsing/Channels

a channel is really a synchronous message passing (passing messages over a stream, in a sync manner: rendezvous).

message passing is _kind of_ a async way to communicate over channels (if no stream is necessary, so it's not really a channel).

types of message passing: blocking/non blocking, order matters??

can expand on message passing being a kind of subtype of channel (async buffered). or, it can be seen as channels being a type of message passing...related, but not the same (similar to parallel vs concurrent?)

just expand on types of channels:

block/non or sync/non sync (same in this context?)

buffered/non buffered

bounded/unbounded

"A key characteristic of channels is that they are blocking. In the most primitive form, an unbuffered channel acts as a rendezvous, any reader will await a writer and vice-versa. Buffering can be introduced, but unbounded buffering is discouraged, as bounded buffering with blocking can be an important tool coordinating pacing and back pressure, ensuring a system doesn't take on more work than it can achieve." - http://clojure.com/blog/2013/06/28/clojure-core-async-channels.html

expand on the differences of message passing: http://en.wikipedia.org/wiki/Message_passing#Synchronous_versus_asynchronous_message_passing

you CAN have a buffered channel, which is kinda async, but IMO that's kinda message passing?

cons:Another problem related to asynchronous message passing has to do with buffering.

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Communicate

- Message Passing

]

.right-column[

http://en.wikipedia.org/wiki/Message_passing

"Message passing is a way of invoking behavior through some intermediary service or infrastructure.

Rather than directly invoke a process, subroutine, or function by name as in conventional programming, message passing sends a message to a process (which may be an actor or object) and relies on the process and the supporting infrastructure to select and invoke the actual code to run."

]

---

.left-column[

## Concurrency tour

- General concepts / Terminology

- Communicate

- Channels

]

.right-column[

http://en.wikipedia.org/wiki/Channel_%28programming%29

"In computing, a channel is a model for interprocess communication and synchronization via message passing. A message may be sent over a channel, and another process or thread is able to synchronously receive messages sent over a channel it has a reference to,

as a stream."

]

---

name: tour_models

template: inverse

class:middle,center

# Models

- Threads & Mutexes

- Threads & Transactional Memory

- Processes & IPC

- CSP

- Actors

- Futures/Promises/Dataflow

- Evented/co-routines/continuations

???

( http://en.wikipedia.org/wiki/Database-as-IPC and https://blog.engineyard.com/2011/5-subtle-ways-youre-using-mysql-as-a-queue-and-why-itll-bite-you/ )

---

class: center

# Follow along

http://shairosenfeld.com/such_blocking_very_concurrency/

# http://shairosenfeld.com/sbvc/

---

name: table

Let's classify the Concurrency models

| Model |

OS Mechanism |

Scheduling |

Communication |

Concurrent/Parallel |

Example |

| Threads & Mutexes |

Threads |

Preemptive |

Shared Memory (locks) |

C / P |

Mutex |

| Threads & Transactional Memory |

Threads |

Preemptive |

Shared Memory (commit/abort) |

C / P |

STM |

| Threads & Message Passing |

Threads |

Preemptive |

Message Passing |

C / P |

~Anonymous Actors |

| Processes (OS) & IPC |

Processes |

Preemptive |

Shared memory / Message Passing (IPC) |

C / P |

Resque / Forking Webservers |

| CSP (commincating sequential processes) |

Threads / Processes |

Preemptive |

Message Passing (Channels) |

C / P |

Occam / Go channels |

| Actors |

Threads |

Preemptive |

Message Passing (Mailbox) |

C / P |

Erlang / Celluloid |

| Futures / Promises |

Threads |

Cooperative |

Message Passing (itself) |

C / P |

Oz / Dataflow variables |

| Coroutines |

One process/thread |

Cooperative |

? Message Passing |

C (not parallel) |

Fibers |

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

-

Models can be cooerced into being used slightly differently (use message passing instead of channels / implment with processes vs threads / use evented+multiple threads / IPC with shared mem / futures and promises async single threaded / etc), this is a generic attempt to classify main models simply.

-

Parallel means if only _one instance_ of the _model_ is running: can it work in "parallel"? Not "can you parallelize the model", nor "can you parallelize one componment" (eg, thread/actor/etc).

-

Processes are not "concurrent processes", but rather OS processes.

???

- This is a rudimentary attempt to simplify a pretty convoluted and complicated subject, take it as such :)

- was thinking of adding another column that was "Sucks" ?

- TODO put table on github or something, allow some collab editing of it? (since I aint the authority)

---

.left-column[

## Concurrency tour

- Models

- Threads & Mutexes

]

.right-column[

| Threads & Mutexes |

Threads |

Preemptive |

Shared Memory (locks) |

C / P |

Mutex |

]

---

.left-column[

## Concurrency tour

- Models

- Threads & Mutexes

]

.right-column[

| Threads & Mutexes |

Threads |

Preemptive |

Shared Memory (locks) |

C / P |

Mutex |

Atomicity problems?

http://en.wikipedia.org/wiki/Atomicity_%28programming%29

Just use a mutex!

]

???

important to understand, boiled down, atomicity is the underlying problem

of most synchronization issues re concurrency and that's what communicating

(or ensuring immutability, which ensures atomicity isn't needed beacuse we'll never run into that scenario)

aims to solve

---

.left-column[

## Concurrency tour

- Models

- Threads & Mutexes

]

.right-column[

| Threads & Mutexes |

Threads |

Preemptive |

Shared Memory (locks) |

C / P |

Mutex |

```ruby

mutex = Mutex.new

shared_counter = 0

t1 = Thread.new do

mutex.synchronize do

shared_counter += 1

end

end

t2 = Thread.new do

mutex.synchronize do

shared_counter += 1

end

end

t1.join

t2.join

# shared_counter will always be 2

# (w/out mutex we could get 1 as a result)

```

]

---

.left-column[

## Concurrency tour

- Models

- Threads & Mutexes

]

.right-column[

| Threads & Mutexes |

Threads |

Preemptive |

Shared Memory (locks) |

C / P |

Mutex |

## Pros

- Don't have to worry about scheduling (preemtive)

- Common paradigm

- Wide support.

## Cons

- Scheduling overhead (context switching)

- Synchronization/locking issues

- livelock, deadlock, blalock

]

---

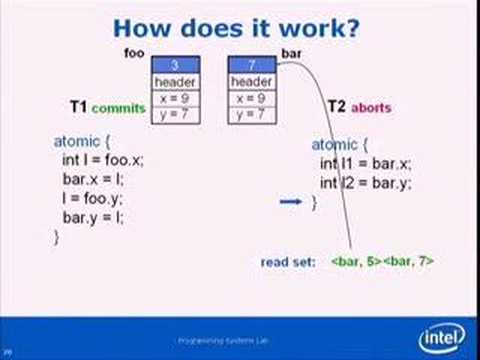

.left-column[

## Concurrency tour

- Models

- Threads & Transactions

]

.right-column[

| Threads & Transactional Memory |

Threads |

Preemptive |

Shared Memory (commit/abort) |

C / P |

STM |

]

---

.left-column[

## Concurrency tour

- Models

- Threads & Transactions

]

.right-column[

| Threads & Transactional Memory |

Threads |

Preemptive |

Shared Memory (commit/abort) |

C / P |

STM |

Similar conceptually to

http://en.wikipedia.org/wiki/Database_transaction

"Transactions provide an "all-or-nothing" proposition, stating that each work-unit performed in a database must either complete in its entirety or have no effect whatsoever. Further, the system must isolate each transaction from other transactions, results must conform to existing constraints in the database, and transactions that complete successfully must get written to durable storage."

]

---

.left-column[

## Concurrency tour

- Models

- Threads & Transactions

]

.right-column[

| Threads & Transactional Memory |

Threads |

Preemptive |

Shared Memory (commit/abort) |

C / P |

STM |

http://en.wikipedia.org/wiki/Software_transactional_memory

"After completing an entire transaction verifies that other threads have not made changes to memory that it accessed in the past...If validation is successful, made permanent, is called a commit."

"May also abort at any time."

]

---

.left-column[

## Concurrency tour

- Models

- Threads & Transactions

]

.right-column[

| Threads & Transactional Memory |

Threads |

Preemptive |

Shared Memory (commit/abort) |

C / P |

STM |

http://stackoverflow.com/questions/1627600/how-do-you-implement-software-transactional-memory

Don't wait on a lock, just check when we're ready to _commit_:

```ruby

Thread1:

atomic {

- read variable

- increment variable

- write variable

}

Thread2:

atomic {

- read variable

- increment variable

# going to write, but Thread1 has written variable...

# notices Thread1 changed data, so ROLLS BACK

- write variable

}

```

Give concurrency the benefit of the doubt it'll probably be fine, most of the time.

"Unlike the locking techniques...STM is very optimistic"

]

---

.left-column[

## Concurrency tour

- Models

- Threads & Transactions

]

.right-column[

| Threads & Transactional Memory |

Threads |

Preemptive |

Shared Memory (commit/abort) |

C / P |

STM |

## Pros

- Increased concurrency

- No thread needs to wait for access to a resource

- Smaller scope that needs synchronizing - modifying disjoint parts of a data structure

- Locks don't compose; STM's benefits are composability and modularity - http://www.haskell.org/haskellwiki/Software_transactional_memory

## Cons

- Aborting transactions

- Places limitations on the behavior of transactions - they cannot perform any operation that cannot be undone, including most I/O.

]

???

Such limitations are typically overcome in practice by creating buffers that queue up the irreversible operations and perform them at a later time outside of any transaction

---

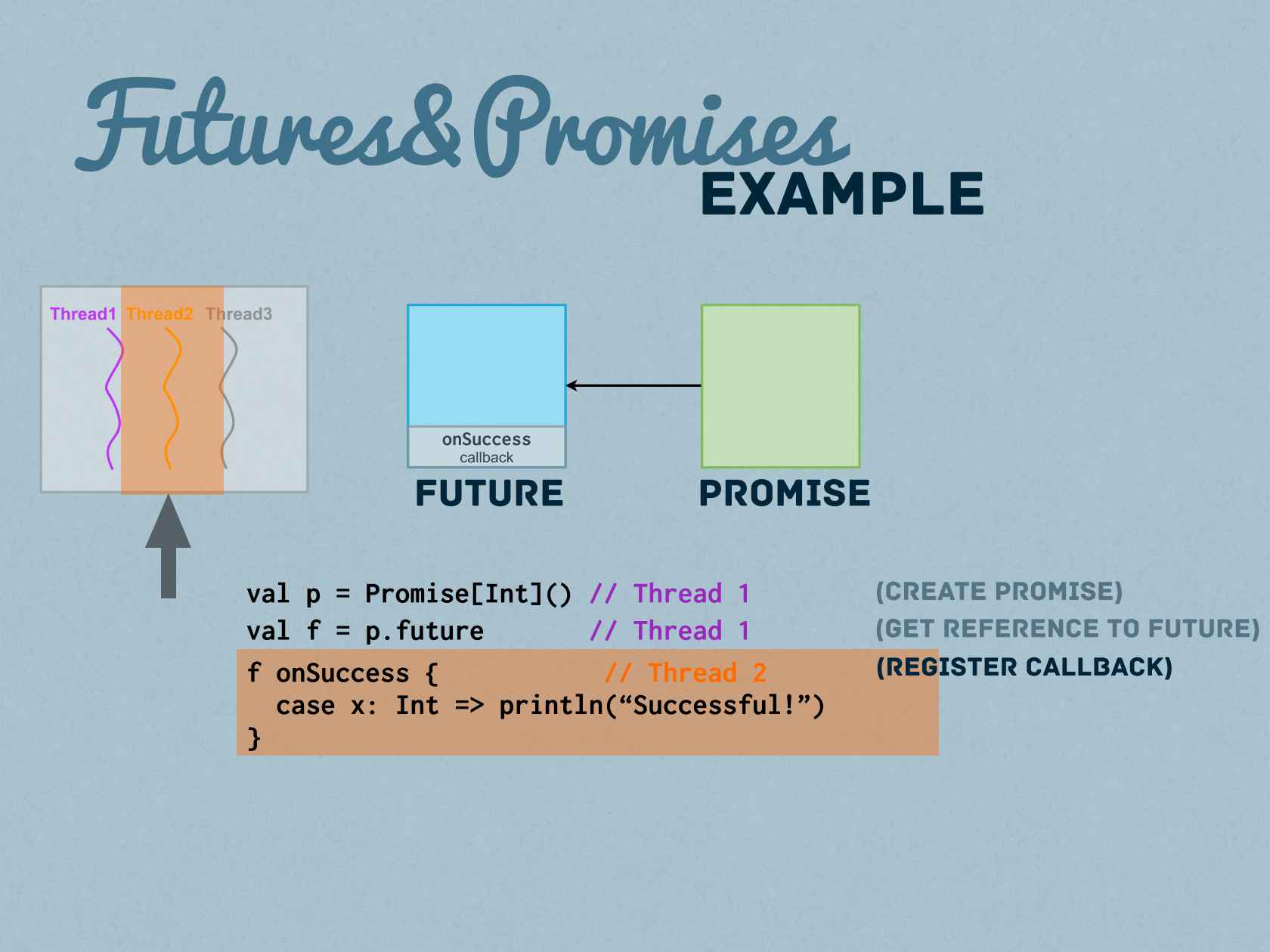

.left-column[

## Concurrency tour

- Models

- Futures / Promises

]

.right-column[

| Futures / Promises |

Threads |

Cooperative |

Message Passing (itself) |

C / P |

Oz / Dataflow variables |

]

???

@CT Futures/Promises

http://en.wikipedia.org/wiki/Futures_and_promises

"They describe an object that acts as a proxy for a result that is initially unknown"

compute a value, either start retrieiving the value before it's asked for or not (explicit vs implicit/eager vs lazy)

if you ask for it and it's not ready, do you block or not (raise for example)

blocking vs non blocking

"The access could block the current thread or process until the future is resolved (possibly with a timeout).

This is the semantics of dataflow variables in the language Oz."

- dataflow

# http://doc.akka.io/docs/akka/current/scala/dataflow.html

from what I can tell, uses promises and futures and differs on blocking semantics

it's a larger model/arch that is about chaining and changing dependent data (calculating Y if X changes automatically)

but it probably has heavy use of futures and promisies.

https://blog.jcoglan.com/2013/03/30/callbacks-are-imperative-promises-are-functional-nodes-biggest-missed-opportunity/

con: http://lambda-the-ultimate.org/node/3221

con: http://doc.akka.io/docs/akka/current/scala/dataflow.html

( The limitation is that the code needs to be side-effect free, i.e. deterministic. You can't use exceptions, time, random etc.,

but need to treat the part of your program that uses dataflow concurrency as a pure function with input and output. )

---

.left-column[

## Concurrency tour

- Models

- Futures / Promises

]

.right-column[

| Futures / Promises |

Threads |

Cooperative |

Message Passing (itself) |

C / P |

Oz / Dataflow variables |

```ruby

require 'celluloid/future'

future_pinger = pinger.future.do_ping

# returned a reference to an async thing

# we can do other calcs here

Bignum.calculate_primes

future_pinger.value # block when called explicitly

# we get a "ping"

ponger.do_pong

# now we get a "pong"

```

Communication is done via ... itself.

When we ask for the value we're telling the ponger to wait for a ping and only then do it.

]

---

.left-column[

## Concurrency tour

- Models

- Futures / Promises

]

.right-column[

| Futures / Promises |

Threads |

Cooperative |

Message Passing (itself) |

C / P |

Oz / Dataflow variables |

## Pros

- Abstracts locks away

## Cons

- Readiness efficiency (wait if it's not ready yet, similar to locks)

http://en.wikipedia.org/wiki/Futures_and_promises#Blocking_vs_non-blocking_semantics

]

---

.left-column[

## Concurrency tour

- Models

- Processes & IPC

]

.right-column[

| Processes (OS) & IPC |

Processes |

Preemptive |

Shared memory / Message Passing (IPC) |

C / P |

Resque / Forking Webservers |

]

---

class:center

.left-column[

## Concurrency tour

- Models

- Processes & IPC

]

.right-column[

| Processes (OS) & IPC |

Processes |

Preemptive |

Message Passing (IPC) |

C / P |

Resque / Forking Webservers |

How do we handle atomicty? Don't share memory.

How do you communicate?

]

???

You coulse use Shared memory but you'll still need locks (shmget)

---

.left-column[

## Concurrency tour

- Models

- Processes & IPC

]

.right-column[

| Processes (OS) & IPC |

Processes |

Preemptive |

Shared memory / Message Passing (IPC) |

C / P |

Resque / Forking Webservers |

## Message Passing (channels)

http://stackoverflow.com/questions/7969557/how-are-message-passing-concurrent-languages-better-than-shared-memory-concurren

"Passing data through a channel to a receiver, implies transfer of ownership of the data."

]

---

.left-column[

## Concurrency tour

- Models

- Processes & IPC

]

.right-column[

| Processes (OS) & IPC |

Processes |

Preemptive |

Shared memory / Message Passing (IPC) |

C / P |

Resque / Forking Webservers |

Yeah Pipes:

```

date | xargs echo "The date is:"

```

Mundane communication:

```ruby

# process 1

increment_shared_data

pipe.write("process 2, I'm done")

# process 2

pipe.read # blocks until process 1 allows to go

increment_shared_data

## no locks! you could argue a blocking read

## is kind of a "lock", but it's just way to sync

##

## in this case, atomicty isn't guaranteed

## but we are explicity communicating so no need

```

Sockets, and more...

]

---

.left-column[

## Concurrency tour

- Models

- Processes & IPC

]

.right-column[

| Processes (OS) & IPC |

Processes |

Preemptive |

Shared memory / Message Passing (IPC) |

C / P |

Resque / Forking Webservers |

## Pros

- Can't corrupt data when data is not shared.

- No locking.

- Easier to scale horizontally (adding nodes).

## Cons

- Can't communicate over shared memory

- Slower to spawn a new process vs a new thread

- More memory overhead.

- Scaling horizontally is $$$ expensive.

]

---

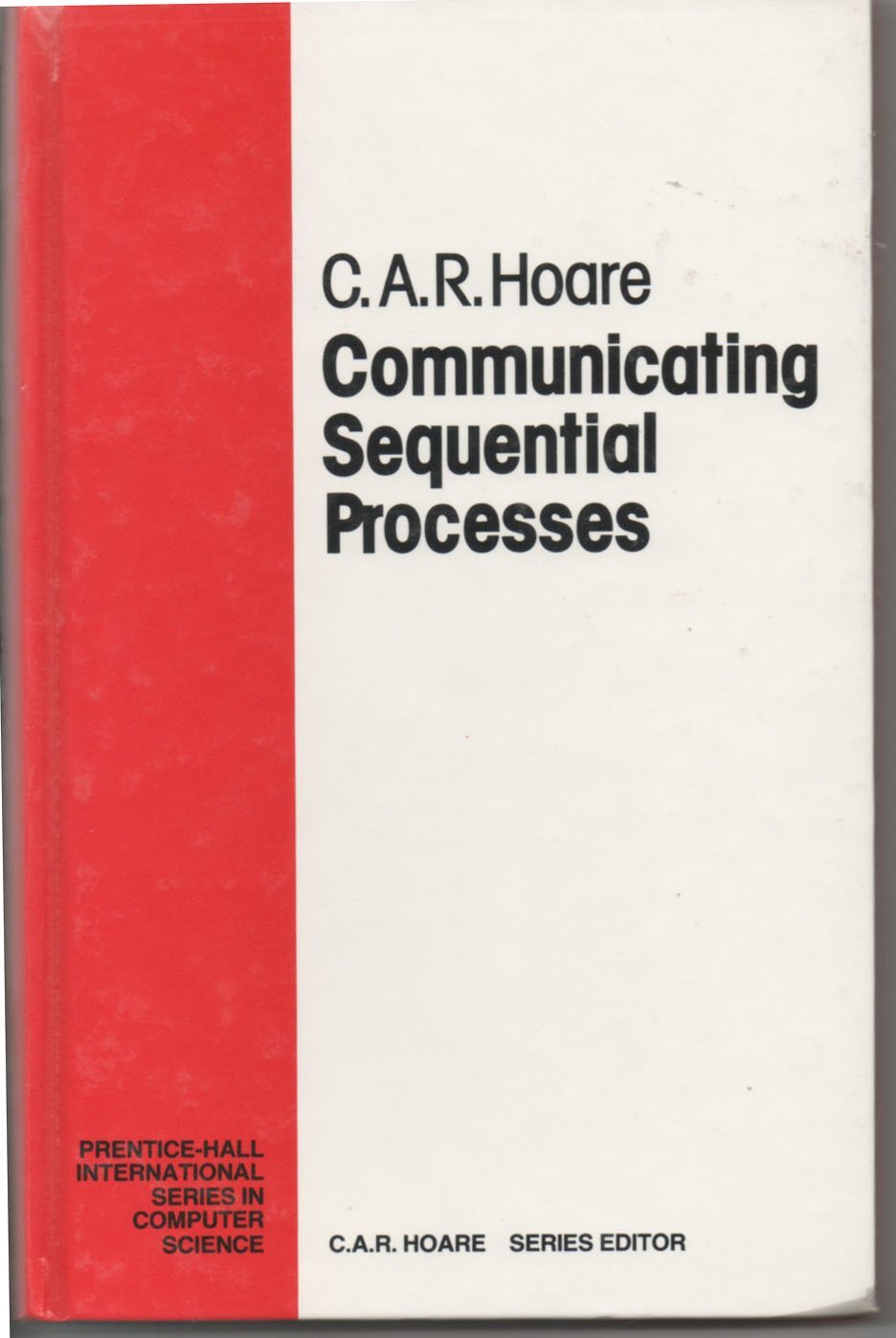

.left-column[

## Concurrency tour

- Models

- CSP

]

.right-column[

| CSP (commincating sequential processes) |

Threads / Processes |

Preemptive |

Message Passing (Channels) |

C / P |

Occam / Go channels |

]

???

closely related to actors

CSP was first described in a 1978 (everything has already been invented), historic interest

Go highly influenced by it

---

.left-column[

## Concurrency tour

- Models

- CSP

]

.right-column[

| CSP (commincating sequential processes) |

Threads / Processes |

Preemptive |

Message Passing (Channels) |

C / P |

Occam / Go channels |

https://gobyexample.com/channels

http://golangtutorials.blogspot.com/2011/06/channels-in-go.html

```go

// Go channels! Similar to pipes in IPC

func makeCakeAndSend(cs chan string) {

i = i + 1

cakeName := "Strawberry Cake " + strconv.Itoa(i)

fmt.Println("Making a cake and sending ...", cakeName)

cs <- cakeName //send a strawberry cake

}

func receiveCakeAndPack(cs chan string) {

s := <-cs //get whatever cake is on the channel

fmt.Println("Packing received cake: ", s)

}

```

Same as IPC, dont need locking because we are waiting for our turn, whenever it is our turn.

]

---

.left-column[

## Concurrency tour

- Models

- CSP

]

.right-column[

| CSP (commincating sequential processes) |

Threads / Processes |

Preemptive |

Message Passing (Channels) |

C / P |

Occam / Go channels |

## Pros

- Uses message passing and channels heavily, alternative to locks

## Cons

- Shared data can be simpler to implement for simple use cases

- Handling very big messages, or a lot of messages, unbounded buffers (backpressure helps this)

- Messaging is essentially a copy of shared data (usually non-issue. Embedded systems?)

]

---

.left-column[

## Concurrency tour

- Models

- Actors

]

.right-column[

| Actors |

Threads |

Preemptive |

Message Passing (Mailbox) |

C / P |

Erlang / Celluloid |

]

---

.left-column[

## Concurrency tour

- Models

- Actors

]

.right-column[

| Actors |

Threads |

Preemptive |

Message Passing (Mailbox) |

C / P |

Erlang / Celluloid |

```ruby

class PingActor

include Celluloid

def ping

sleep rand(2)

puts "ping"

Celluloid::Actor[:ponger].async.pong

end

end

class PongActor

include Celluloid

def pong

puts "pong"

Celluloid::Actor[:pinger].async.ping

end

end

Celluloid::Actor[:pinger] = PingActor.new

Celluloid::Actor[:ponger] = PingActor.new

# start ping ponging

Celluloid::Actor[:pinger].async.ping

puts "this should print first though"

```

]

---

.left-column[

## Concurrency tour

- Models

- Actors

]

.right-column[

| Actors |

Threads |

Preemptive |

Message Passing (Mailbox) |

C / P |

Erlang / Celluloid |

Atomicity? Conflict? Every actor has it's own address space. Don't share memory. Communicate via mailboxes.

"Don’t communicate by sharing state; share state by communicating"

]

---

.left-column[

## Concurrency tour

- Models

- Actors

]

.right-column[

| Actors |

Threads |

Preemptive |

Message Passing (Mailbox) |

C / P |

Erlang / Celluloid |

```ruby

class PingActor

include Celluloid

def ping

sleep rand(2)

puts "ping"

Celluloid::Actor[:ponger].async.pong

end

end

class PongActor

include Celluloid

def pong

puts "pong"

Celluloid::Actor[:pinger].async.ping

end

end

Celluloid::Actor[:pinger] = PingActor.new

Celluloid::Actor[:ponger] = PingActor.new

# start ping ponging

Celluloid::Actor[:pinger].async.ping

puts "this should print first though"

```

]

???

object oriented threads

---

.left-column[

## Concurrency tour

- Models

- Actors

]

.right-column[

| Actors |

Threads |

Preemptive |

Message Passing (Mailbox) |

C / P |

Erlang / Celluloid |

## Comparison with CSP

http://en.wikipedia.org/wiki/Communicating_sequential_processes#Comparison_with_the_Actor_Model

- CSP processes are anonymous, while actors have identities.

- Message-passing in actor systems is fundamentally asynchronous (CSP traditionally uses synchronous messaging: "rendezvous")

- CSP uses explicit channels for message passing, whereas actor systems transmit messages to named destination actors.

]

---

.left-column[

## Concurrency tour

- Models

- Actors

]

.right-column[

| Actors |

Threads |

Preemptive |

Message Passing (Mailbox) |

C / P |

Erlang / Celluloid |

## Pros

- Uses message passing and channels heavily, alternative to locks

- No shared state (avoid locks, easier to scale)

- Easier to reason about the code (maintenance)

## Cons

- When shared state is required doesn't fit as well

- Implementing shared data can be simpler to implement for mundane use cases

- Handling very big messages, or a lot of messages, unbounded buffers (backpressure helps this)

- Messaging is essentially a copy of shared data (usually non-issue. Embedded systems?)

]

---

.left-column[

## Concurrency tour

- Models

- Coroutines

]

.right-column[

| Coroutines |

One process/thread |

Cooperative |

? Message Passing |

C (not parallel) |

Fibers |

]

???

@CT Coroutines / Continuations

http://en.wikipedia.org/wiki/Coroutine

---

.left-column[

## Concurrency tour

- Models

- Coroutines

]

.right-column[

| Coroutines |

One process/thread |

Cooperative |

? Message Passing |

C (not parallel) |

Fibers |

Fibers are coroutines:

```ruby

require 'fiber'

f1 = nil # for scope

f2 = Fiber.new do

loop do

puts "pong"

f1.transfer

end

end

f1 = Fiber.new do

i = 0

loop do

i += 1

sleep rand(2)

puts "ping"

f2.transfer

Fiber.yield if i == 10

end

end

f1.resume

```

Cooperative! Handing execution rights between one another, saving local state.

]

---

.left-column[

## Concurrency tour

- Models

- Coroutines

]

.right-column[

| Coroutines |

One process/thread |

Cooperative |

? Message Passing |

C (not parallel) |

Fibers |

A Curious Course on Coroutines and Concurrency:

David Beazley (https://twitter.com/dabeaz) writing an operating system (!) with only coroutines.

http://dabeaz.com/coroutines/

No Threads, Evented style, just cooperative scheduling of coroutines...

Possible use cases:

http://stackoverflow.com/questions/303760/what-are-use-cases-for-a-coroutine

]

---

.left-column[

## Concurrency tour

- Models

- Coroutines

]

.right-column[

| Coroutines |

One process/thread |

Cooperative |

? Message Passing |

C (not parallel) |

Fibers |

## Pros

- Expressive state: state based computations much easier to understand and implement

- No need for locks (cooperative scheduling)

- Scales vertically (add more cpu)

## Cons

- Single thread: Harder to parallelize/scale horizontally (use more cores, add more nodes)

- Constrained to have all the components work together symbiotically

]

---

.left-column[

## Concurrency tour

- Models

- Evented Programming

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

]

???

@CT Evented style: eventmachine, nodejs

(coroutines/continuations?)

more

etc etc

CAVEAT: evented programming isn't "the best", use the best tool for the job. I just want to understand it

---

name: evented

.left-column[

## Concurrency tour

- Models

- Evented Programming

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

## Examples

- C10k problem

- Eventmachine in ruby

- Twisted in python

- Redis's event loop

- Apache vs Nginx

- Node.js vs the world

- AJAX

- signals? rescuing exceptions?

]

---

name: buildingblocks

.left-column[

## What is evented programming?

- Common building blocks

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

## Common building blocks

- Event Handlers / Callbacks

- Dispatcher: Connecting callbacks, watchers, the loop (event queue/registry)

- Timers

- Event loop

- Reactor / Proactor pattern: handling blocking operations

]

???

CPS style

(XXX sidenote: "A continuation is just that, it's a saved state." - http://c2.com/cgi/wiki?StacklessPython )

CPS:"No procedure is allowed to return to its caller--ever." http://matt.might.net/articles/by-example-continuation-passing-style/

"inversion of control"

]

---

.left-column[

## What is evented programming?

- Common building blocks

- Event Handlers / Callbacks

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

http://en.wikipedia.org/wiki/Event_(computing)#Event_handler

```ruby

# Taken from github @tmm1/http_parser.rb

# uses ry http parser

require "http/parser"

parser = Http::Parser.new

parser.on_headers_complete = proc do

p parser.headers

end

```

```ruby

h[:on_headers_complete] = proc {

puts "inspect all the headers"

}

```

Create "watchers" which store callbacks, of what to do when an event is recognized.

]

---

.left-column[

## What is evented programming?

- Common building blocks

- Dispatcher

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

When something happens that we have a watcher for, that watcher queues an event to act upon, to be executed.

An abstraction of the Queue/Registry of pending events to take action upon is the Dispatcher.

- Event type (timer, io, whatever)

- Event handler (callback)

- Data (IO handles/other)

| name (event type/incoming event) |

what to do (callback) |

IO handle or event specific data (data) |

| headers parsed |

inspect the headers |

Socket fd 4 |

| body chuck received |

add it to the buffer |

Socket fd 4 |

| a timer fired |

execute the timer callback/event handler |

in 666 seconds |

| handle user interrupt |

write logs |

SIGUSR1 |

| justin bieber is playing on the radio |

change station |

Baby Baby Baby |

]

---

.left-column[

## What is evented programming?

- Common building blocks

- Timers

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

[Javascript](http://nodejs.org/api/timers.html) example:

```javascript

// setTimeout() or setInterval

// timer + callback (event handler)

//

var myVar=setInterval(function(){myTimer()},1000);

function myTimer()

{

alert("boo");

}

```

[EventMachine](http://eventmachine.rubyforge.org/EventMachine/Timer.html):

```ruby

EM.set_timer(1) {

do_thing

}

```

[Python Twisted](https://twistedmatrix.com/documents/12.3.0/core/howto/time.html):

```python

def runEverySecond():

print "a second has passed"

l = task.LoopingCall(runEverySecond)

l.start(1.0) # call every second

```

]

---

.left-column[

## What is evented programming?

- Common building blocks

- Event loop

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

http://en.wikipedia.org/wiki/Event_loop AKA the main loop

Redis's event loop:

```c

// redis.c main() calls aeMain(server.el);

void aeMain(aeEventLoop *eventLoop) {

eventLoop->stop = 0;

while (!eventLoop->stop) {

if (eventLoop->beforesleep != NULL)

eventLoop->beforesleep(eventLoop);

aeProcessEvents(eventLoop, AE_ALL_EVENTS);

}

}

```

Twisted's main loop (twisted/internet/base.py)

```python

def mainLoop(self):

while self._started:

try:

while self._started:

# Advance simulation time in delayed

# event processors.

self.runUntilCurrent()

t2 = self.timeout()

t = self.running and t2

self.doIteration(t)

```

]

---

.left-column[

## What is evented programming?

- Common building blocks

- Reactor Pattern

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

http://en.wikipedia.org/wiki/Reactor_pattern

```ruby

loop do

IO.select([socket])

# socket is ready!

# this will ALWAYS be instantaneous

handle_socket

end

```

when something is _ready_ to do work, call the dispatcher and do the work!

]

???

http://stackoverflow.com/questions/9138294/what-is-the-difference-between-event-driven-model-and-reactor-pattern

---

.left-column[

## What is evented programming?

- Common building blocks

- Proactor Pattern

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

http://en.wikipedia.org/wiki/Proactor_pattern

Do the work _immediately_, pass a callback to it to be executed when it's done.

```ruby

# more at github @methodmissing/eio

def test_open_close_with_proc_cb

closed = false

ccb = Proc.new{ closed = true }

cb = Proc.new do |fd|

EIO.close(fd, &ccb)

end

EIO.open(__FILE__, &cb)

EIO.wait

assert closed

end

```

Another example: Windows Completion Ports

http://stackoverflow.com/questions/9138294/what-is-the-difference-between-event-driven-model-and-reactor-pattern

]

???

http://methodmissing.github.io/eio/

---

name:libev

.left-column[

## What is evented programming?

- Common building blocks

- Putting it together: Typical evented program

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

Gory details of what the libev loop (http://doc.dvgu.ru/devel/ev.html#synopsis) does:

- Before the first iteration, call any pending watchers.

- If EVFLAG_FORKCHECK was used, check for a fork.

- If a fork was detected, queue and call all fork watchers.

- Queue and call all prepare watchers.

- If we have been forked, recreate the kernel state.

- Update the kernel state with all outstanding changes.

- Update the "event loop time".

- Calculate for how long to sleep or block, if at all

(active idle watchers, EVLOOP_NONBLOCK or not having

any active watchers at all will result in not sleeping).

- Sleep if the I/O and timer collect interval say so.

- Block the process, waiting for any events.

- Queue all outstanding I/O (fd) events.

- Update the "event loop time" and do time jump handling.

- Queue all outstanding timers.

- Queue all outstanding periodics.

- If no events are pending now, queue all idle watchers.

- Queue all check watchers.

- Call all queued watchers in reverse order (i.e. check watchers first)

Signals and child watchers are implemented as I/O watchers, and will

be handled here by queueing them when their watcher gets executed.

- If ev_unloop has been called, or EVLOOP_ONESHOT or EVLOOP_NONBLOCK

were used, or there are no active watchers, return, otherwise

continue with step 2.

]

---

.left-column[

## What is evented programming?

- Common building blocks

- Putting it together: Typical evented program

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

A simplified version of most event loops:

```ruby

loop do

process_events_on_the_queue

# process_timers, handle_io, handle_signals, etc

wait_for_events_and_enqueue

end

```

queue == dispatcher concept

]

---

name: proscons

.left-column[

## Evented Programming

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

## Pros

- Avoid polling

- CPU bound vs IO bound

- Don't do what you don't need to do

- Expanding your horizons (very different paradigms)

- Scales well vs spawning many threads (caveat)

## Cons

- You block the event loop, all goes bad

- Program flow is "spaghetti"-ish

- Callback Hell

- Hard to debug, you loose "the stack"

- Closures/First class functions

- Rely heavily on state

]

---

.left-column[

## Beyond C10k

]

.right-column[

| Evented |

One process/thread |

Cooperative |

? shared memory ? |

C (not parallel) |

Eventmachine |

## Sidenote: C10M

http://highscalability.com/blog/2013/5/13/the-secret-to-10-million-concurrent-connections-the-kernel-i.html

http://c10m.robertgraham.com/p/manifesto.html

http://c10m.robertgraham.com/2013/02/wimpy-cores-and-scale.html

http://www.youtube.com/watch?v=D09jdbS6oSI

]

---

template:inverse

# Conclusions

---

class:center,middle

.left-column[

## Conclusion

]

.right-column[

## All the things aren't black and white

]

---

class:center,middle

.left-column[

## Conclusion

]

.right-column[

## All the things aren't black and white

]

---

.left-column[

## Use the best tool

### Some examples

]

.right-column[

### Threads/Locks:

- Implement other models

- Simple programs

### Actor model:

- Facebook chat application, Ericson AXD 301 switch,

- telephone, RabbitMQ: messaging system,

- EJabberd XMPP chat,

- SMS systems (WhatsApp uses Erlang)

### CSP (golang):

- https://gist.github.com/ungerik/3731476

### Evented Model:

- Your Browser

]

???

Actors:

Lots of entities sending messages to each other

Evented:

Lots of idle waiting for external event to happen (keyboard)

http://www.slideshare.net/drorbr/the-actor-model-towards-better-concurrency

---

.left-column[

## Conclusion

]

.right-column[

## USE THE BEST TOOL FOR THE JOB

]

---

.left-column[

## Conclusion

]

.right-column[

## Keep on learning

]

---

name: last-page

template: inverse

## That's all, folks.

Slideshow created using [remark](http://github.com/gnab/remark).

---

name: questions

template: inverse

## Questions?

(Is there time?!)

Slideshow created using [remark](http://github.com/gnab/remark).

---

name: resources

.left-column[

## resources:

]

.right-column[

- OS mechanisms

http://stackoverflow.com/questions/3324643/processes-threads-green-threads-protothreads-fibers-coroutines-whats-the

- Concurrency vs Parallelism

http://blog.golang.org/concurrency-is-not-parallelism

http://en.wikipedia.org/wiki/Flynn%27s_taxonomy

- Cooperative

http://www.igvita.com/2009/05/13/fibers-cooperative-scheduling-in-ruby/

http://masanjin.net/blog/fibers

- Message passing/Channels

http://en.wikipedia.org/wiki/Message_passing#Synchronous_versus_asynchronous_message_passing

http://www.dalnefre.com/wp/2010/07/message-passing-part-1-synchronous-rendezvous/

http://clojure.com/blog/2013/06/28/clojure-core-async-channels.html (async channels)

]

---

- Seven concurrency models in seven weeks (book)

http://pragprog.com/book/pb7con/seven-concurrency-models-in-seven-weeks

- Producer Consumer

http://en.wikipedia.org/wiki/Producer%E2%80%93consumer_problem

- Evented

https://gist.githubusercontent.com/sandal/3612925/raw/315e7bfc5de7a029606b3885d71953acb84f112e/ChatServer.rb broken link taken from https://practicingruby.com/articles/event-loops-demystified

http://doc.dvgu.ru/devel/ev.html#synopsis (libev)

http://www.slideshare.net/simon/evented-io-based-web-servers-explained-using-bunnies

- IO types

http://www.ibm.com/developerworks/library/l-async/

- Async vs Sync

https://gist.github.com/jamesob/9548827

- Good select/epoll explanation (nelson elhage)

http://www.quora.com/Network-Programming/How-is-epoll-implemented

http://www.quora.com/Network-Programming/How-is-select-implemented

---

- CSP

http://www.igvita.com/2010/12/02/concurrency-with-actors-goroutines-ruby/

http://www.usingcsp.com/cspbook.pdf

http://assets.cs.ncl.ac.uk/seminars/224.pdf

http://www.cs.pomona.edu/~kim/cs334/s00/Lectures/Lec23/sld031.htm

http://en.wikipedia.org/wiki/Communicating_sequential_processes#Comparison_with_the_Actor_Model

- Go

https://www.youtube.com/watch?v=f6kdp27TYZs

http://tour.golang.org/#1

http://joneisen.tumblr.com/post/38188396218/concurrency-models-go-vs-erlang

- Actors

http://www.slideshare.net/mperham/actors-and-threads

http://learnyousomeerlang.com/content

- STM

http://stackoverflow.com/questions/209751/any-real-world-experience-using-software-transactional-memory

http://www.youtube.com/watch?v=tYRIg4M5xNM

http://book.realworldhaskell.org/read/software-transactional-memory.html

---

- Video "Erlang the movie"

http://www.youtube.com/watch?v=uKfKtXYLG78

- C10k? C10M:

http://c10m.robertgraham.com/p/blog-page.html

http://highscalability.com/blog/2013/5/13/the-secret-to-10-million-concurrent-connections-the-kernel-i.html

https://news.ycombinator.com/item?id=7180672

A talk about concurrency patterns boiled down. An online slideshow of 'Such blocking very concurrency, wow' given at ScaleConf.